How we designed a coordinated system of specialized AI agents to automate front-end development—and why we chose agents over a single LLM

The Problem: Front-End Scaffolding at Scale

As our engineering team grew, we kept hitting the same bottleneck: spinning up new React applications. The process involved scaffolding a project, discovering which components from our internal library were relevant, integrating them correctly, and ensuring everything followed our conventions.

Traditional tools like Create React App handle the scaffolding, but they can't understand our component library or enforce our specific patterns. We needed something that could bridge the gap between a human idea ("build a budgeting dashboard") and a working application that properly uses our existing components.

Why Agents Instead of a Single LLM?

Our first instinct was to throw everything at GPT-4 with a massive prompt containing our entire component library and conventions. This approach had several problems:

- Context window limitations: Our component documentation alone exceeded most models' context limits

- Inconsistent focus: A single model juggling planning, component discovery, implementation, and verification often neglected one of them

- Debugging difficulty: When something went wrong, it was hard to tell which part of the process failed

Instead, we built a system modeled on how real development teams work: specialized roles with clear handoffs between them.

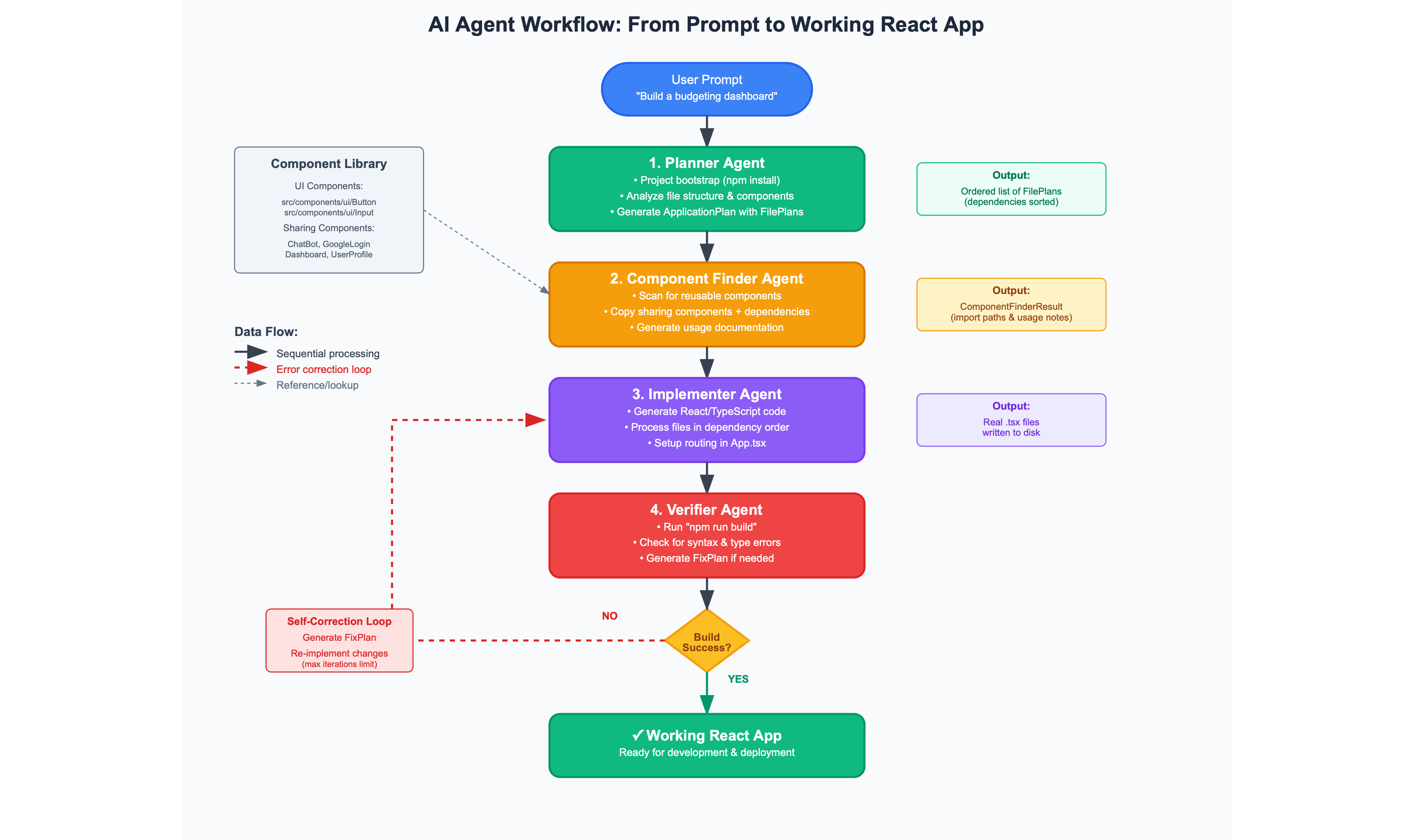

System Architecture: Four Specialized Agents

We implemented this as a directed graph (LangGraphPageBuilderWorkflow) that chains together four agents, each with a specific expertise:

1. The Planner Agent

Input: User prompt ("Build a budgeting dashboard")

Responsibilities:

- Bootstrap the project (folder creation, template copying, npm install)

- Analyze the current file structure and available components

- Generate an ApplicationPlan with specific FilePlans for each needed file

- Order files topologically to ensure dependencies are handled correctly

Technical challenge: Getting consistent structured output across different LLM providers while maintaining context about our project conventions.
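A minimal sketch of the plan structure and the ordering step, in Python. Only the names ApplicationPlan and FilePlan come from the system described here; the fields (path, purpose, depends_on) and the example file paths are assumptions for illustration. The ordering uses the standard library's graphlib.TopologicalSorter.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class FilePlan:
    # Hypothetical fields; the real FilePlan schema is not shown in this post.
    path: str
    purpose: str
    depends_on: list[str] = field(default_factory=list)


@dataclass
class ApplicationPlan:
    files: list[FilePlan]

    def ordered_paths(self) -> list[str]:
        """Return file paths so that every file comes after its dependencies."""
        # TopologicalSorter expects a mapping of node -> set of predecessors.
        graph = {f.path: set(f.depends_on) for f in self.files}
        # static_order() raises graphlib.CycleError on circular dependencies.
        return list(TopologicalSorter(graph).static_order())


plan = ApplicationPlan(files=[
    FilePlan("src/App.tsx", "routing", ["src/pages/Dashboard.tsx"]),
    FilePlan("src/pages/Dashboard.tsx", "page", ["src/components/BudgetChart.tsx"]),
    FilePlan("src/components/BudgetChart.tsx", "chart"),
])
order = plan.ordered_paths()
```

With this ordering, the chart component is generated first, then the page that uses it, and src/App.tsx last, so each file can rely on what it imports already existing.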

2. The Component Finder Agent

Input: The ApplicationPlan from the Planner

Responsibilities:

- Scan the plan for opportunities to reuse existing components

- Copy shared components and install their peer dependencies

- Record correct import paths for UI components

- Generate usage documentation for found components

Why this matters: This agent prevents the "rebuild everything from scratch" problem. It's like having a senior developer who knows every component in your codebase.
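To make the discovery step concrete, here is a small sketch that uses plain keyword matching as a stand-in for whatever matching the agent actually performs. The registry entries, the @acme/ui import paths, and the find_components helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComponentEntry:
    # Hypothetical registry entry; names and paths below are made up.
    name: str
    import_path: str
    keywords: frozenset[str]


REGISTRY = [
    ComponentEntry("DataTable", "@acme/ui/DataTable", frozenset({"table", "list", "rows"})),
    ComponentEntry("LineChart", "@acme/ui/LineChart", frozenset({"chart", "trend", "graph"})),
]


def find_components(file_purpose: str) -> dict[str, str]:
    """Map component name -> import path for entries whose keywords appear in the text."""
    words = set(file_purpose.lower().split())
    return {c.name: c.import_path for c in REGISTRY if c.keywords & words}


matches = find_components("Monthly spending chart with a table of transactions")
```

The useful part is the output shape: a map from component name to the exact import path, which a later step can inject instead of guessing.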

3. The Implementer Agent

Input: Sorted file plans and component integration notes

Responsibilities:

- Generate production-ready React/TypeScript code with Tailwind styling

- Process files in dependency order

- Inject the correct import paths identified by the Component Finder

- Handle routing setup in src/App.tsx

Key constraint: Must strictly adhere to allowed npm packages and follow our coding conventions.
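One way to enforce the package constraint is to scan generated source for imports outside an allowlist before writing the file. The sketch below assumes a hypothetical allowlist and treats "@/" as a local path alias; neither is taken from the actual system.

```python
import re
from typing import Optional

# Hypothetical allowlist; the real list of permitted packages is not given here.
ALLOWED_PACKAGES = {"react", "react-dom", "react-router-dom"}

# Matches `import ... from "pkg"` and bare `import "pkg"` statements.
IMPORT_RE = re.compile(
    r"""^\s*import\s+(?:[^'"]*?\s+from\s+)?['"]([^'"]+)['"]""", re.MULTILINE
)


def package_name(specifier: str) -> Optional[str]:
    """Return the npm package for an import specifier, or None for local imports."""
    if specifier.startswith((".", "/", "@/")):
        return None
    parts = specifier.split("/")
    # Scoped packages look like @scope/name; otherwise the first segment is the package.
    return "/".join(parts[:2]) if specifier.startswith("@") else parts[0]


def disallowed_imports(source: str) -> set[str]:
    """Return the set of imported packages that are not on the allowlist."""
    packages = {package_name(s) for s in IMPORT_RE.findall(source)}
    return {p for p in packages if p is not None and p not in ALLOWED_PACKAGES}


generated = '''
import React from "react";
import { format } from "date-fns/format";
import Button from "./components/Button";
import "lodash";
'''
violations = disallowed_imports(generated)
```

A non-empty result can be fed back to the model as a concrete instruction ("remove date-fns") rather than surfacing later as a failed install.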

4. The Verifier Agent

Input: The complete generated codebase

Responsibilities:

- Run npm run build to test for syntax and type errors

- If successful, end the workflow

- If failed, collect error logs and initiate self-correction

Critical insight: Many AI coding tools generate code and stop there. We run the build and treat its result as the definition of done.
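The build step itself is a subprocess call whose exit code and log are captured. A minimal sketch, assuming a hypothetical BuildResult type and run_build helper (on Windows the executable would be npm.cmd):

```python
import subprocess
from dataclasses import dataclass


@dataclass
class BuildResult:
    ok: bool
    log: str


def run_build(
    project_dir: str,
    command: tuple[str, ...] = ("npm", "run", "build"),
) -> BuildResult:
    """Run the build command in project_dir and capture its combined output."""
    proc = subprocess.run(
        list(command),
        cwd=project_dir,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr so messages stay in order
        text=True,
    )
    # A zero exit code means the build passed; the log is kept either way.
    return BuildResult(ok=proc.returncode == 0, log=proc.stdout)
```

Keeping the command as a parameter makes the function easy to exercise with a stand-in command, without needing a real project on disk.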

The Self-Correction Loop

When the build fails, we don't just give up. The Verifier analyzes the errors and creates a targeted FixPlan. The Implementer then makes specific changes based on this plan, and the Verifier rebuilds.

This mirrors how human developers debug: examine the error, form a hypothesis about the fix, implement it, and test again.

Current limitation: We cap this at a maximum number of iterations to prevent infinite loops. Complex errors sometimes require human intervention.
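The loop can be sketched as follows. The three callables stand in for the Verifier's build, the Verifier's FixPlan generation, and the Implementer's edit step; their signatures are assumptions, and the cap of 3 is an illustrative value, not the one we use.

```python
from typing import Callable

MAX_FIX_ITERATIONS = 3  # assumed value; the actual cap is not stated in this post


def build_with_self_correction(
    build: Callable[[], tuple[bool, str]],
    plan_fix: Callable[[str], str],
    apply_fix: Callable[[str], None],
    max_iterations: int = MAX_FIX_ITERATIONS,
) -> bool:
    """Build, and on failure plan and apply a fix, at most max_iterations times.

    Returns True once a build succeeds, False if the cap is reached first.
    """
    ok, log = build()
    for _ in range(max_iterations):
        if ok:
            return True
        fix_plan = plan_fix(log)  # Verifier: turn the error log into a FixPlan
        apply_fix(fix_plan)       # Implementer: make the targeted changes
        ok, log = build()         # Verifier: rebuild
    return ok
```

A False return is the point where a human takes over, with the last build log as the starting context.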

Technical Implementation Details

LLM Provider Abstraction

We built a lightweight wrapper that standardizes prompts across providers. For models on AWS Bedrock, we ask for XML output (to avoid JSON formatting issues), while for OpenAI models we ask for JSON. The wrapper parses both into the same structure, which lets us swap models without changing agent logic.
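The parsing half of such a wrapper might look like the sketch below. The provider labels, the parse_file_plan function, and the path/purpose fields are hypothetical; the point is only that two wire formats converge on one shape.

```python
import json
import xml.etree.ElementTree as ET


def parse_file_plan(raw: str, provider: str) -> dict[str, str]:
    """Normalize a provider's raw response into one dict shape.

    Assumed formats: a JSON object for "openai", flat XML elements for "bedrock".
    """
    if provider == "openai":
        data = json.loads(raw)
        return {"path": data["path"], "purpose": data["purpose"]}
    if provider == "bedrock":
        root = ET.fromstring(raw)
        return {
            "path": root.findtext("path", default="").strip(),
            "purpose": root.findtext("purpose", default="").strip(),
        }
    raise ValueError(f"unknown provider: {provider}")


from_json = parse_file_plan('{"path": "src/App.tsx", "purpose": "routing"}', "openai")
from_xml = parse_file_plan(
    "<file_plan><path>src/App.tsx</path><purpose>routing</purpose></file_plan>",
    "bedrock",
)
```

Agents downstream only ever see the normalized dict, so they have no knowledge of which provider produced it.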

State Management

Each agent receives exactly what it needs and produces a well-defined output for the next agent. The evolving project state flows through the graph predictably.
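As an illustration of that flow, the sketch below passes a typed state dict through four stub functions in order. It is plain Python standing in for the graph and does not use the LangGraph API; the state keys and the canned stub outputs are assumptions.

```python
from typing import Callable, TypedDict


class WorkflowState(TypedDict, total=False):
    # Hypothetical keys; each agent reads earlier keys and adds its own.
    prompt: str
    plan: list[str]
    import_paths: dict[str, str]
    generated_files: dict[str, str]
    build_ok: bool


Agent = Callable[[WorkflowState], WorkflowState]


def planner(state: WorkflowState) -> WorkflowState:
    return {**state, "plan": ["src/pages/Dashboard.tsx", "src/App.tsx"]}


def component_finder(state: WorkflowState) -> WorkflowState:
    return {**state, "import_paths": {"Card": "@acme/ui/Card"}}


def implementer(state: WorkflowState) -> WorkflowState:
    files = {path: f"// generated for: {state['prompt']}" for path in state["plan"]}
    return {**state, "generated_files": files}


def verifier(state: WorkflowState) -> WorkflowState:
    # Stand-in check; the real Verifier runs the build instead.
    return {**state, "build_ok": len(state["generated_files"]) == len(state["plan"])}


def run_pipeline(prompt: str, agents: list[Agent]) -> WorkflowState:
    state: WorkflowState = {"prompt": prompt}
    for agent in agents:
        state = agent(state)  # each agent returns a new state, never mutates in place
    return state


final = run_pipeline(
    "Build a budgeting dashboard",
    [planner, component_finder, implementer, verifier],
)
```

Because every agent returns a new state rather than mutating a shared one, the state after each step can be logged and compared when tracing a failure.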

Error Handling

We categorize build errors into types (missing imports, type errors, component mismatches) and have specific fix strategies for each category.
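A rough sketch of the categorization step, keyed on TypeScript diagnostic codes. The mapping from codes to our three categories is an assumption made for illustration, and the sample log lines are illustrative text in tsc's diagnostic format rather than output from a real run.

```python
import re
from enum import Enum


class ErrorCategory(Enum):
    MISSING_IMPORT = "missing_import"
    COMPONENT_MISMATCH = "component_mismatch"
    TYPE_ERROR = "type_error"
    UNKNOWN = "unknown"


# Assumed mapping from TypeScript diagnostic codes to categories; first match wins.
RULES = [
    (re.compile(r"TS2307|TS2304"), ErrorCategory.MISSING_IMPORT),  # cannot find module / name
    (re.compile(r"TS2741"), ErrorCategory.COMPONENT_MISMATCH),     # required property missing
    (re.compile(r"TS2322|TS2345"), ErrorCategory.TYPE_ERROR),      # not assignable
]


def categorize(line: str) -> ErrorCategory:
    for pattern, category in RULES:
        if pattern.search(line):
            return category
    return ErrorCategory.UNKNOWN


def group_errors(build_log: str) -> dict[ErrorCategory, list[str]]:
    """Group the error lines of a build log by category."""
    grouped: dict[ErrorCategory, list[str]] = {}
    for line in build_log.splitlines():
        if "error TS" in line:
            grouped.setdefault(categorize(line), []).append(line.strip())
    return grouped


sample_log = """\
src/App.tsx(3,24): error TS2307: Cannot find module './pages/Dashboard' or its corresponding type declarations.
src/pages/Dashboard.tsx(12,9): error TS2322: Type 'string' is not assignable to type 'number'.
"""
grouped = group_errors(sample_log)
```

Each category can then be routed to its own fix strategy, for example re-checking recorded import paths for MISSING_IMPORT before asking the model to rewrite anything.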

What We've Learned So Far

What Works Well:

- Component discovery: The system is surprisingly good at finding relevant components from our library

- Convention compliance: Generated code consistently follows our folder structure and naming patterns

- Error categorization: Build failures follow predictable patterns we can address systematically

Current Limitations:

- Complex state management: Apps requiring Redux or complex state sharing often need human refinement

- Novel UI patterns: Anything not represented in our component library is hit-or-miss

- Performance optimization: Generated code works but isn't always optimal

- Cost: Running four specialized agents isn't cheap—this is definitely more expensive than a single LLM call

Interesting Discoveries:

- Specialization helps debugging: When something goes wrong, it's usually clear which agent is responsible

- Documentation quality matters: Well-documented components get integrated correctly; poorly documented ones cause failures

- Build verification is essential: Static analysis misses many issues that only surface during compilation

When to Use This Approach

This system works well for:

- Internal prototypes: Quick POCs that need to look professional

- Standardized applications: CRUD interfaces, dashboards, admin panels

- Component library showcases: Demonstrating how different components work together

It's not suitable for:

- Customer-facing applications: Design requirements are too specific

- Performance-critical code: Generated code prioritizes correctness over optimization

- Novel interaction patterns: Anything requiring creative UI solutions

Trade-offs and Considerations

Benefits:

- Consistency: Every generated app follows the same conventions

- Component reuse: Automatic discovery and integration of existing components

- Speed: Much faster than manual scaffolding for standard use cases

Costs:

- Compute expenses: Multiple LLM calls per generation

- Maintenance overhead: Prompts need updates as the codebase evolves

- Infrastructure complexity: More moving parts than a simple script

What's Next

We're still early with this system. These are the areas we're exploring next:

Short-term improvements:

- Better error analysis to reduce self-correction iterations

- Cost optimization by using smaller models for simpler tasks

- Integration with our existing CI/CD pipeline

Future possibilities:

- Backend API generation to match the frontend

- Automated test generation (though early experiments weren't promising)

- Integration with design systems for better UI consistency

The Real Value Proposition

This isn't about replacing developers—it's about removing friction from the creative process. When spinning up a new idea doesn't require context switching to set up boilerplate, developers can stay focused on the interesting problems.

The system works best when you have:

- A mature component library

- Strong conventions and patterns

- Frequent need for similar types of applications

- Budget for compute costs

Bottom line: It's a tool for a specific use case, not a universal solution. But for that use case—generating standardized React applications that properly integrate your existing components—it's proving quite valuable.

The techniques described here are part of a broader trend toward agent-based software development. While the tooling is still evolving, the core insight—specialized AI agents working together—seems promising for automating repetitive development tasks.
